You may have heard about upcoming enhancements to Data Factory. Back in September we talked here about some of the architectural differences between SQL Server Integration Services (SSIS) and Azure Data Factory Version 2 (ADF V2), and the questions to pose as you try to select one of these products for data processing work. Well, the latest enhancements are out and they are exciting.

Data Flow

SSIS has always delivered two key features to ETL development: a graphical user interface for building data transformations, and the metaphors of a control flow and a data flow in creating packages, which act like small programs. A control flow is a series of separate tasks that you can chain together into a visually authored program, and a data flow is a similar, visually authored pipeline that processes rows of tabular data through transformations, filters, lookups, joins, and so on. Data flows are always called from control flows, generally in the context of some larger process. This metaphor is so commonplace to users of SSIS that it’s sort of like the water we swim in – and when initial versions of ADF arrived without this, it was puzzling for some.

Never fear, though – because the team at Microsoft knows how successful the control flow and data flow concepts are, they have been actively phasing it into ADF. The first version of Data Factory was based on moving data using a tumbling time window approach, but V2 brought the introduction of a Control Flow (June 2018). Soon, the Data Flow* feature will also become generally available. Since September we’ve been able to trial the data flow features in a preview. It’s worth kicking the tires, and it looks really promising.

How is this Different? (Enter Databricks)

While the visual metaphors and the ease of use look similar to SSIS, the technology under the hood here is radically different.

SSIS works, at a high level, by the visual editor building your packages as XML files. There’s an executable dtexec.exe that can read, parse, and execute those package files. Over the years different flavors of this have come along, such as Project Deployment in the SSIS catalog in SQL Server, but the fundamental architecture is the same. It works well, but it’s not necessarily cloud-optimized, and it’s a bit limited in terms of taking advantage of new innovations that have been created in the big data world – especially with respect to parallelism/massively parallel processing (MPP)/multi-machine scale-out.

The approach in ADF V2 is sort of 180 degrees from SSIS – it starts with the premise that data is going to be processed on a Spark cluster (specifically an Azure Databricks cluster, which is a specific flavor of Spark) in the cloud, and the engineering of the data flow ADF components is all about making it easier and more intuitive to harness that massive processing horsepower.

We’ll return to how it does that in a second, but first, why is it important? Well, it means that we get two wonderful capabilities right in the product:

- Automatic scaling to fit workload requirements. We don’t have to buy machinery big enough to handle the peak, maybe infrequent workload, and the infrastructure can be tailored for best fit, all the time.

- On top of auto scaling, there’s the opportunity for this system to do execution optimization on your code. Where SSIS will reliably run the packages you feed it, it does little in the way of tuning or optimizing those, and they just run in the sequence that you’ve written. Think about SQL Server’s Query Optimizer – it’s not an exact match, but Spark has some similar features that enable it to do optimization of code that you provide. Because ADF is deliberately built over the top of these cluster technologies, we get them with no additional or special effort. That’s huge.

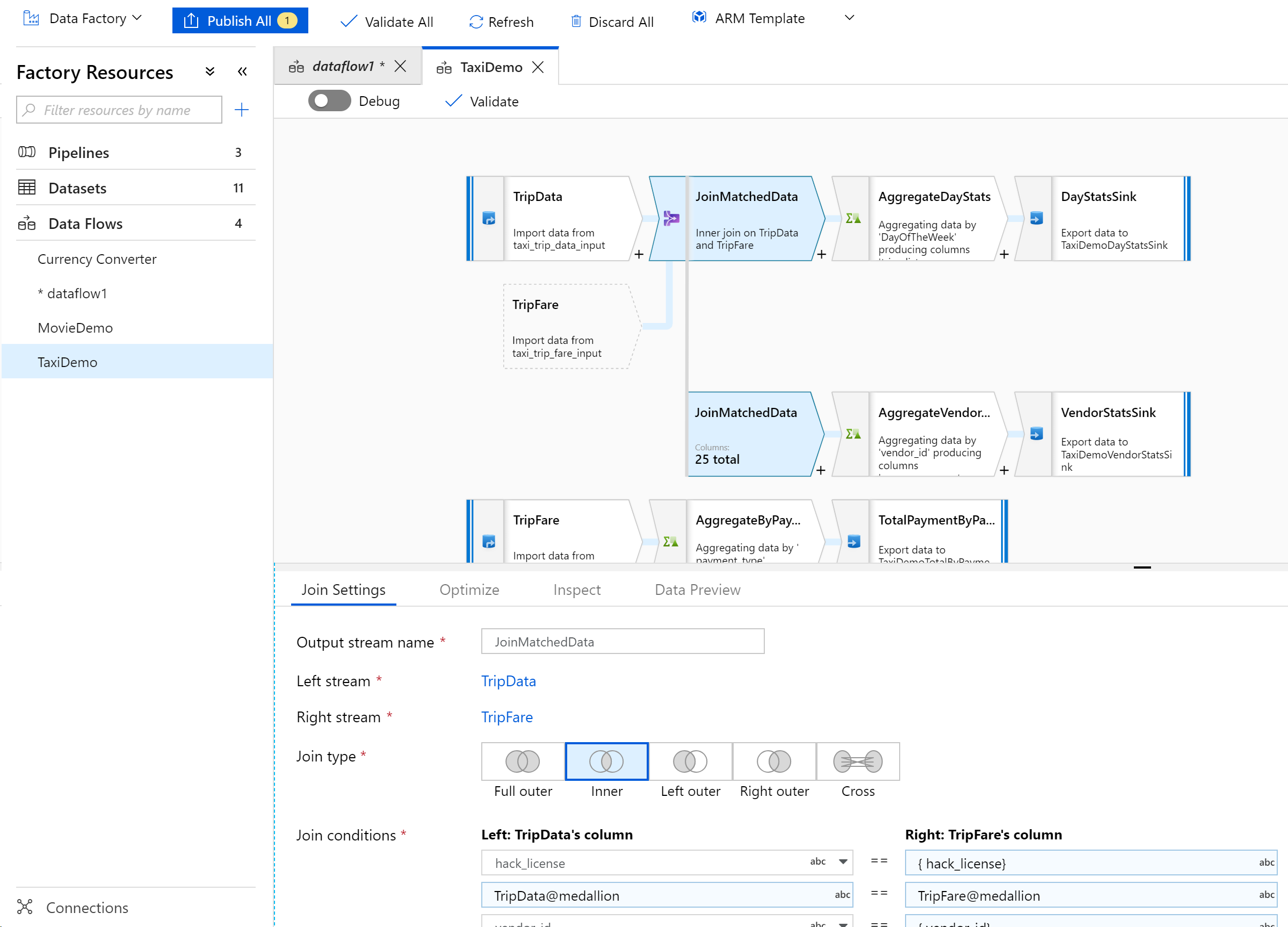

ADF Data Flow Design

There are several excellent, existing walkthroughs out there showing how to build your first data flow, so I won’t take you through that, but I do want to talk about how ADF harnesses the different technology in this new world.

If you try ADF Data Flows, you’ll find the visual editor looks cosmetically different, but conceptually is much the same as SSIS, so the learning curve should be short. Many SSIS transformations map directly to equivalents in ADF, such as joins, filters, branching, derived column expressions, and so on. What’s happening behind the scenes is quite different, though.

First, you’ll be working in a browser instead of a desktop tool, but it’s a fully featured authoring experience. Second, the Data Factory setup will compose and store your Data Flow as a JSON object (think: a modern version of the SSIS XML file).

Third, and this is the new bit: Data Factory will automatically compile your work into ready-to-run code for Apache Spark, on a Databricks cluster – with no additional effort from developers. This is really the vital difference: ADF V2 Data Flow is, in some sense, a visual editor to enable you to “write” code for a Databricks/Spark cluster without code-writing. This means it can handle huge sets of data with a lot less time and energy invested in infrastructure concerns. Sure, there is some important knowledge a team will need to gain to configure the cluster correctly, but it’s dramatically faster and easier than the old days of building out physical Hadoop clusters with their administrative workload, and it’s much easier for an ETL developer to take advantage of the power of that underlying technology.

New Decisions

So how does this change the math for SSIS vs. ADF from our last installment? Until now, the fact that ADF didn’t have the equivalent of the SSIS data flow was a barrier for some teams and some types of workloads. Once this feature becomes generally available, that barrier will disappear and the ADF service will become viable for teams who:

- Want to work with a visual/GUI editor as opposed to writing traditional source code

- Need that “T” in the E “T” L – that is transforming the data during its trip to the destination system, as opposed to having to stage it first and then transform it (ELT)

- Want to take advantage of modern/better scaling and parallel processing that comes with access to a Spark cluster, with an easier learning curve than building out a cluster

| ETL pattern (transform in flight) |

ETL pattern (load first, call functions in the destination to transform the data) |

|

| ADF V2 | Limited EXCELLENT | Excellent |

| SSIS | Excellent | Excellent |

That said, as you can see, this is a cloud service-centric idea. You will still need, as before, at least a hybrid on-premises/Azure environment to take advantage of this new feature. The processing will be performed in Azure. If you are truly constrained to on-premises systems, then SSIS may still be your best option.

One other adjustment to consider is that this new architecture really favors the inclusion of a data lake in your overall plan. ADF is designed for, and works really well with, a cloud-hosted data lake. Where SSIS architectures often transport data straight from source database to destination database, consider using a lake to land your data, and keep copies of the raw data files for different potential use cases.

Finally, a word about cost. If you choose a traditional, on-premises SSIS deployment, you will probably run that under some level of purchased SQL Server license, while for ADF you’d pay on a typical cloud-service model, with the usual differences between the two. One tends to be a one-time, sunk cost, while the other you pay for as you go, and can turn off if needed. Because Data Factory V2 is based on this pay-per-use model, estimating the total cost for this new tool requires a bit more detail about how your solution will work, which translates into a monthly operational cost estimate**.

The details of pricing for ADF V2 existing (meaning already generally available) features are published, but for the yet-to-be-released data flow feature, are not formalized as of this writing. However, if we look at the ADF V2 pricing examples we can at least get some sense of how to think about this. Each operation in ADF has a small incremental cost, so the total bill will be the sum of executions of those over time. Some of these operations are timed, especially data movement, so there’s an impact derived from the volume of data (represented as Data Integration Units or DIUs). In addition, Data Flow uses a Databricks cluster in the background, which might be spun up on demand or left on, depending on the frequency of your loads. Finally, the cluster itself can have different scaling depending on the quantity of data you have to process, which probably will also have some cost implication.

So give Data Flows a try in Azure Data Factory – as Microsoft rolls these Control and Data Flow concepts into the service, it’s rapidly becoming a compelling, modern service for all kinds of ETL work.

* Not to be confused with Power BI Dataflow.

** If you go down the path of blending both these services by running SSIS packages inside ADF V2 using the Integration Runtime, then the SSIS components and required SQL database do have a cloud-pricing model, as well.