I recently got to work with a big-city airport to help them architect and begin implementing a modern data platform on the Azure Government Cloud.

The client had the following set of requirements/restrictions:

- Needed to use the Azure Government Cloud

- Was willing to use/purchase SQL 2016 licenses

- Wanted users to consume Power BI reports

- Had lots of data they wanted to land and archive, but not necessarily bring into a data warehouse just yet

Some of the highlights and challenges of the final implementation are covered below.

Working in the Azure Government Cloud

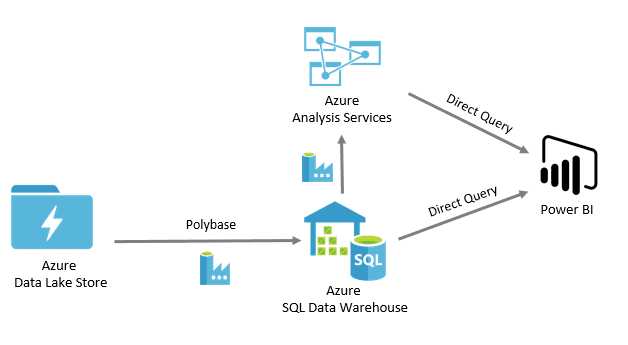

Working in the Azure Government Cloud poses an interesting challenge. It usually falls behind the commercial cloud in functionality and services due to hurdles surrounding a more stringent certification process. For example, a typical commercial cloud analytics Azure platform might look something like the diagram below:

But as of now, June 2017, Data Factory and Data Lake Store are not available in the government cloud. Another drawback? The broad Power BI offerings under the commercial Azure cloud umbrella are considerably narrower in the government version. So, to accommodate the client’s requirements, and make the most of a more restrictive environment, we employed a little creativity.

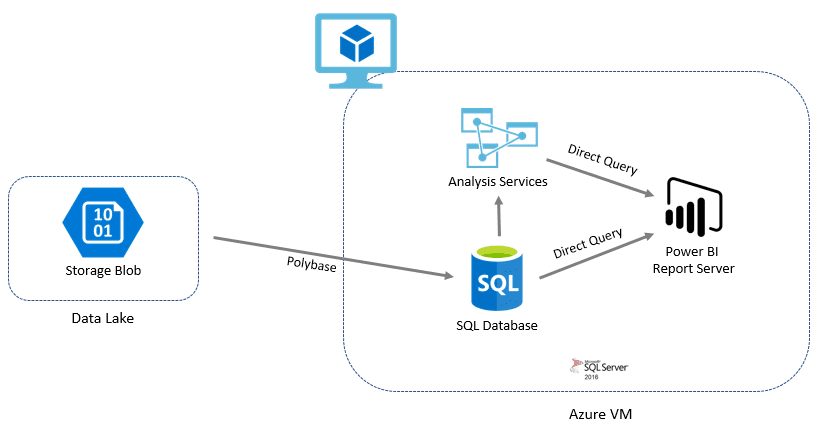

That resulted in an architecture that looks more like the following diagram:

Blob Storage for a Data Lake

Because the Azure Data Lake Store was not available in the government cloud, we used Blob storage to create a data lake. This provided an efficient means to land and archive data. Additionally, the size of the data (both current and future) did not come anywhere near the limits imposed by the storage service. Blob storage also integrates well with all the other Azure Data platform services. The downside to using Blob was the fact that it does not support Azure Active Directory for authentication. The concern here was minor though, as the client did not anticipate many (if any) users needing to go directly to the flat files stored in the data lake.

Using SQL 2016 for the Data Warehouse

With some of the key Platform-as-a-Service options, like Azure Data Factory and Azure Analysis Services, not available in the government cloud, SQL 2016 on a virtual machine became very appealing as the core of the data warehouse.

The SQL database engine was used to house the data mart, and by staging the data in Blob storage, PolyBase could be utilized to load the data warehouse. This allowed the client to a) store most of the raw staging data using cheaper file storage as opposed to storing it in the SQL database, and b) allowed a way for users to utilize SQL and external tables to browse the data lake archive. In-memory columnstore indexes were also applied to help serve up data to the reporting layer.

In the absences of Azure Analysis Services in the government cloud, Tabular Analysis Services was used on the SQL 2016 VM. This worked great, as the client was already using Power BI, and was optimistic about the ease of translating their Power BI models into Tabular, server-side models.

SSIS was used for automation. The client would have liked to use Data Factory, but again, it wasn’t available in the government cloud. SSIS is a mature, well-proven product that met any use-case the client had. A complication with using SSIS (as opposed to Data Factory) was that it meant the client had to have some sort of site-to-site VPN connection established in Azure to pull from on-premises data sources (or have disconnected processes push data into Blob storage). This would have been different with Data Factory as a gateway would have been all that was necessary.

Finally, the client opted to use the new Power BI Report Server to publish and consume SSRS and Power BI reports. This was ideal for them because they currently use SSRS and want to begin using Power BI, and their data models would be housed in Tabular Analysis Services (which at the time of the post was the only data source supported). As all their initial use-cases were a pure publish-reports-to-consumer scenario (as opposed to the need for collaborative, self-service functionality from the portal), the scope of functionality in the Power BI Report Server was sufficient. They also understood that if their use-cases changed over time, they would be able to easily migrate to the Power BI Cloud Service.

Final Thoughts

When architecting solutions in the Azure Government Cloud, you must be aware of the differences in the availability and functionality of services compared to the commercial cloud. This can make it more challenging to put together the best possible solution. Hopefully this example helped illustrate that an effective and efficient data platform can be put together in the government cloud without sacrificing functionality and security. BlueGranite specializes in the challenge of taking your company’s unique use-cases and requirements and putting together an optimal architecture to support both your functional and financial needs. Contact us today to learn more about how we can help.