Over the past couple of months, we’ve highlighted some of the most useful and popular Microsoft Cognitive Services, such as Search, Vision, Speech, and Language. Our final post in the series is about the Knowledge service and its ability to intelligently deploy resources like Question and Answer (QnA) and FAQ content where they are needed most. In this article, we’ll cover some of the basics for getting started, along with some examples of how this service can be used by itself and in combination with other Azure components. Before we get into the details of the Knowledge service, let’s review the business value of AI and Cognitive Services in general.

Microsoft’s Cognitive Services provide artificial intelligence (AI) capabilities: essentially, the ability of a machine to learn from experience and perform tasks that normally require human intelligence. The most popular AI applications involve vision, speech, and text processing, such as identifying objects in a picture, translating speech to text, or extracting the most common or useful parts of a body of text. I like to think of this as converting unstructured data into structured data that is more easily mined for information and insight.

These capabilities represent tremendous value, but have traditionally been difficult to deploy directly in support of a business function. They are complex and expensive to develop manually (usually requiring data scientists) and can be challenging to integrate into applications. Microsoft Cognitive Services relieve this pain by serving as the central intelligence components of an AI Oriented Architecture on Azure: the complex data science elements come pre-built, you can couple many different services together, and you can easily deploy the architecture where your customers are most likely to use it. It’s a microservices pattern for AI.

I’ll use a crawl-walk-run approach to show how your organization might use the Knowledge Cognitive Service in increasingly powerful ways.

Crawl

Writing this blog post was my second opportunity to use MS Cognitive Services. I’ve used the Vision service with a client before and was very impressed with how easy it was to get started and demonstrate accurate object recognition in photos in just a couple of hours. I had a similar experience with the Knowledge service, and as a bonus, got to learn about and create a Bot! The Knowledge service consists primarily of the QnA Maker, which “enables you to power a question and answer service from your semi-structured content like FAQ (Frequently Asked Questions) documents or URLs and product manuals. You can build a model of questions and answers that is flexible to user queries, providing responses that you’ll train a bot to use in a natural, conversational way.”

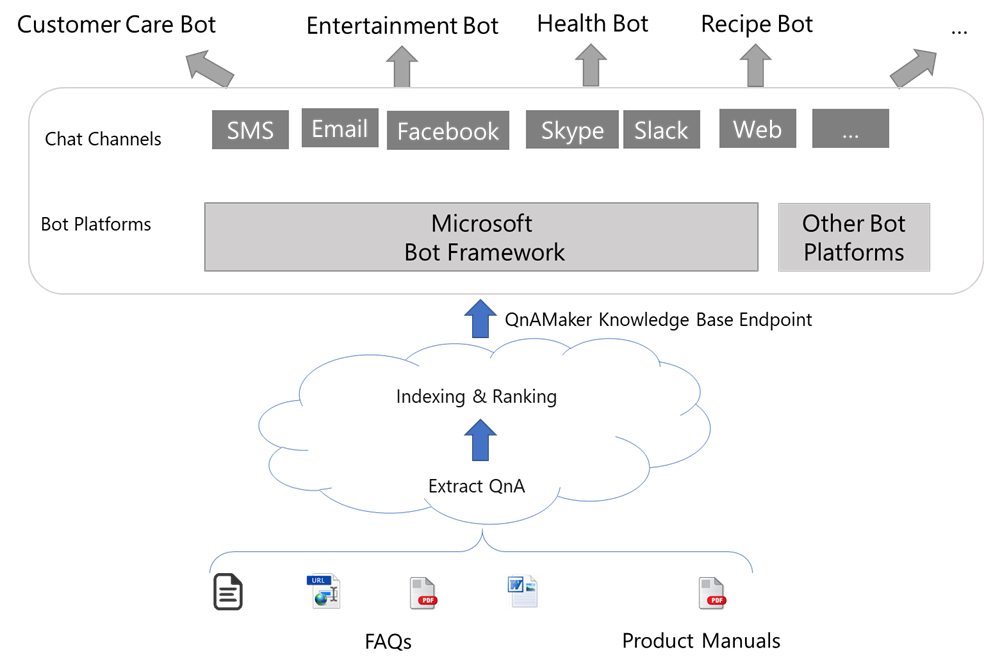

The value here is taking a variety of existing knowledge resources, whether they are on a website, in product manuals, text files, or other documents, and putting them all together to form a unified and interactive question and answer service readily available to users. This is a great way to help employees or customers quickly find the answers they need about products or services across distributed sources, without using valuable human resources to answer repetitive questions. Here’s a sample architecture:

I built my own QnA Maker service, and here is how it went. I got started at the QnA Maker portal. If you’d like to create your own service, make sure you have an Azure account (free trials are available). Once you are logged into the QnA Maker portal, there are three primary tasks, all accomplished without coding, using the user interfaces (UIs) in the QnA Maker and Azure portals:

- Create a QnA service in Azure. This provides the engine to extract question and answer pairs, as well as publish your service endpoint.

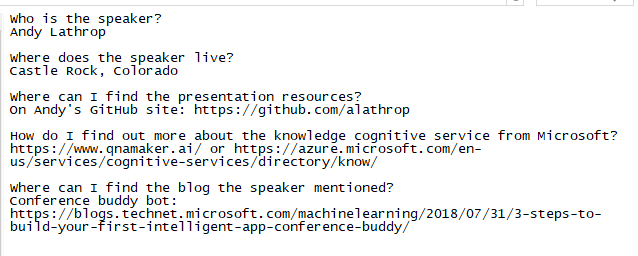

- Populate a knowledge base. Use the QnA Maker interface to add existing URLs or files with question and answer pairs. There are lots of options for data sources – so no need to re-create existing FAQs! I spent 5 minutes typing some simple questions and answers into a plain text file and it worked perfectly. You can also manually add Q and A pairs in the interface.

- Connect your service to your knowledge base. This aggregates the sources you provide to create one knowledge base and enables an API (application programming interface) endpoint – usable in your own applications, third party chat channels like Skype, Slack, and Facebook Messenger, or with something pre-built like a Microsoft Bot.
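
To give a feel for what calling that endpoint from your own application might look like, here is a minimal Python sketch. The URL path, the `EndpointKey` authorization header, and the JSON body shape are assumptions modeled on what the QnA Maker publish page typically displays; the host, knowledge base ID, and key below are placeholders, so copy the exact values from your own portal.

```python
import json
import urllib.request


def build_qna_request(host: str, kb_id: str, endpoint_key: str,
                      question: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks the knowledge base a question."""
    # Assumed URL shape: <host>/knowledgebases/<kb id>/generateAnswer
    url = f"{host.rstrip('/')}/knowledgebases/{kb_id}/generateAnswer"
    body = json.dumps({"question": question}).encode("utf-8")
    headers = {
        "Authorization": f"EndpointKey {endpoint_key}",  # assumed header format
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def ask(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply. Needs a live, published service."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder values only: substitute the ones shown for your own service.
request = build_qna_request(
    host="https://example-qna.azurewebsites.net/qnamaker",
    kb_id="YOUR-KB-ID",
    endpoint_key="YOUR-ENDPOINT-KEY",
    question="Who is the speaker?",
)
print(request.get_method(), request.full_url)
```

Building the request separately from sending it keeps the sketch runnable without credentials; once real values are in place, `ask(request)` would perform the actual call.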

Once you’ve completed these steps, you’re guided to other activities like testing and training your knowledge base, or connecting to a bot. Let’s look at both.

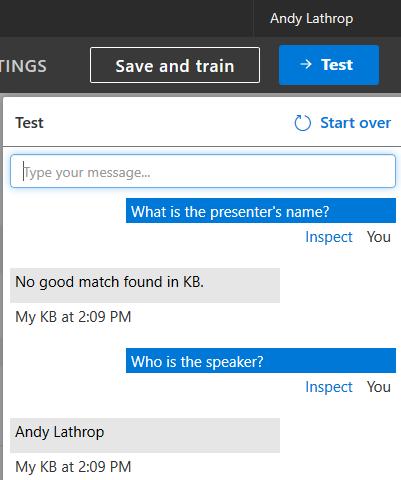

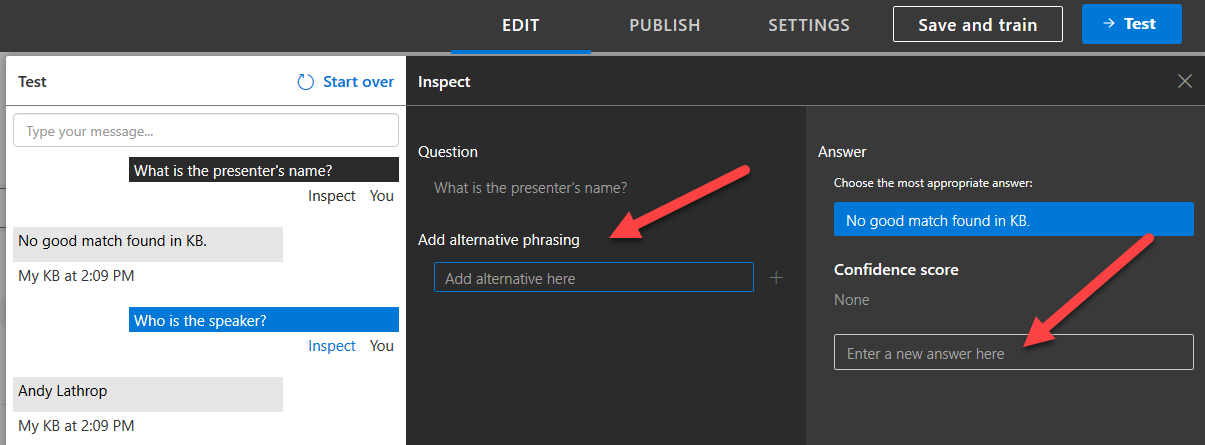

You’ll notice in my sample questions and answers in the screenshot above that I have a question “Who is the speaker?” Using the test feature in the QnA portal, I can get the correct answer. But modifying the question to “What is the presenter’s name?” returns no good match.

Using the UI, I can easily provide an answer to this new question and/or provide alternative phrasing for the question. Adding my name as a new answer worked as expected! You can also update your documents and refresh – so it’s easy to update your knowledge base with files, or through the UI.
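
The effect of adding an alternative phrasing can be illustrated with a toy model. This is not how QnA Maker matches questions internally; it is a deliberately simple exact-match lookup (after lowercasing and stripping punctuation), and the class name, method names, and the answer "Jane Doe" are all hypothetical placeholders.

```python
import string
from typing import Dict, Optional


def normalize(text: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse whitespace."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(cleaned.split())


class ToyKnowledgeBase:
    """Maps every known phrasing of a question to one answer (exact match only)."""

    def __init__(self) -> None:
        self._answers: Dict[str, str] = {}

    def add(self, answer: str, *phrasings: str) -> None:
        """Register one answer under one or more question phrasings."""
        for phrasing in phrasings:
            self._answers[normalize(phrasing)] = answer

    def ask(self, question: str) -> Optional[str]:
        """Return the stored answer, or None when no phrasing matches."""
        return self._answers.get(normalize(question))


kb = ToyKnowledgeBase()
kb.add("Jane Doe", "Who is the speaker?")  # hypothetical answer

before = kb.ask("What is the presenter's name?")
print(before)  # None: this phrasing is unknown so far

# "Train" the knowledge base by adding the alternative phrasing.
kb.add("Jane Doe", "What is the presenter's name?")
after = kb.ask("What is the presenter's name?")
print(after)  # Jane Doe
```

The real service is more forgiving than an exact match, but the workflow is the same: test a question, spot a miss, and attach the new phrasing to the right answer.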

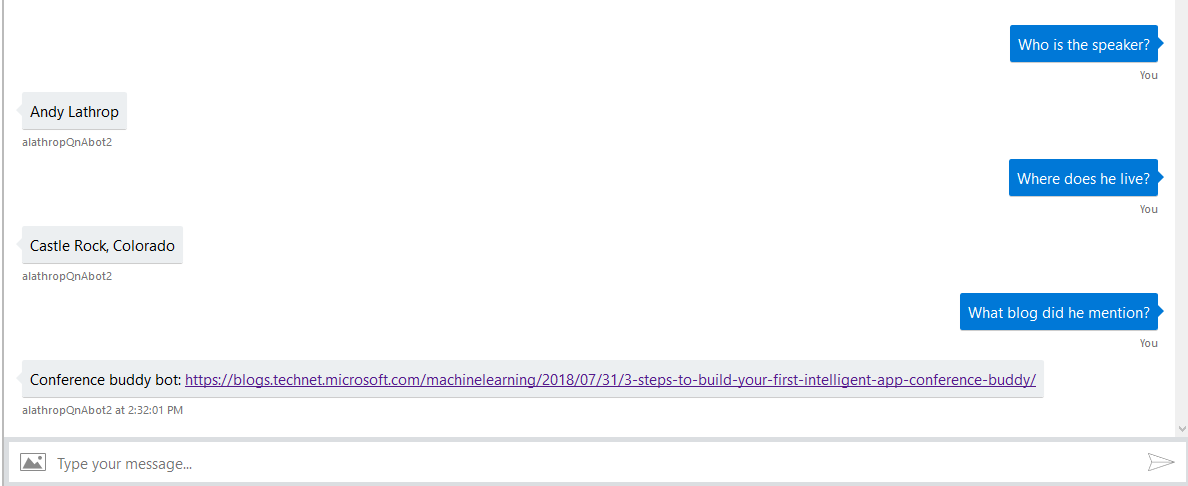

Now, what about making the Knowledge service available to users interactively? This is a job for a bot. Once I finished making my Knowledge service, I was provided instructions to build a QnA Bot. I did this on the Azure portal, and from there I was able to connect my shiny new bot to my existing QnA Maker service and test my knowledge base. There’s also great documentation for connecting your bot to channels like Facebook, Skype, Slack, and others.

Whew! That’s quite a bit just to learn to crawl. But going through the QnA Maker creation process was relatively simple, and very rewarding. I can already see how I might use this on a current DevOps project to provide quick project answers across business, IT, development, and data science teams. The Walk and Run sections are shorter, but demonstrate how we can group multiple Azure components together with the Knowledge service.

Walk

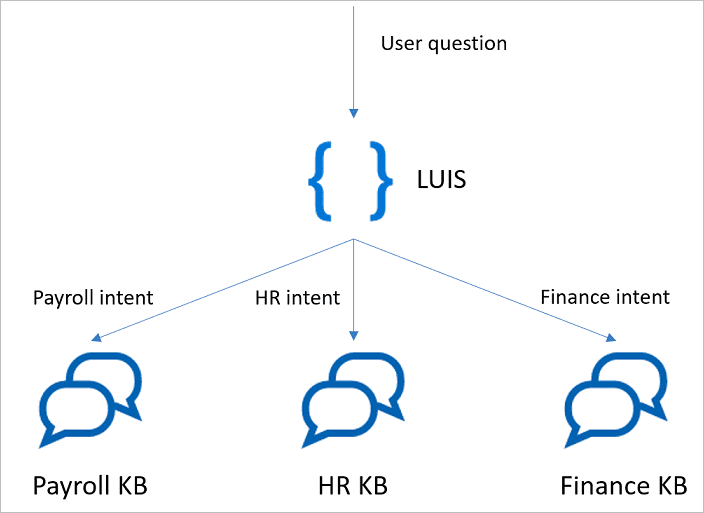

Once you’ve seen a knowledge base in action, you might wonder, “What about a QnA service when there are many different domains of knowledge – such as separate business units or specialty areas?” Glad you asked! Within the service, you certainly could create one knowledge base with all the disparate domain content, but that could be difficult to maintain among a diverse set of curators. Azure has a better way, using the LUIS (Language Understanding) service.

In the diagram above, several areas of knowledge, such as Payroll, HR, and Finance, each have a separate knowledge base (KB) within a single QnA service. To provide a single point of access to all three KBs, LUIS takes the initial user question and determines where it should be routed. This is a great example of the power of AI: taking a natural language question and distilling it into a smaller “package”, like a single word or phrase, so that it can be related more easily to other data. In this case, a LUIS service is trained to recognize these distinct areas and then call the appropriate knowledge base endpoint. As you might expect, all of this can be handled seamlessly within one Azure bot. This is a simple but powerful example of an AI Oriented Architecture. You can read more about integrating LUIS and QnA here.
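
The routing idea can be sketched in a few lines of Python. Everything named here is an assumption for illustration: the intent names mirror the Payroll, HR, and Finance areas above, the knowledge base IDs are made up, and a keyword lookup stands in for a trained LUIS model, which in practice would be called over its own endpoint.

```python
from typing import Callable, Dict, Tuple

# Hypothetical intent names mapped to hypothetical knowledge base IDs.
KB_BY_INTENT: Dict[str, str] = {
    "Payroll": "kb-payroll",
    "HR": "kb-hr",
    "Finance": "kb-finance",
}

# Keyword lists standing in for a trained LUIS model (illustration only).
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "Payroll": ("paycheck", "salary", "direct deposit"),
    "HR": ("vacation", "benefits", "leave"),
    "Finance": ("invoice", "budget", "expense"),
}


def detect_intent(question: str) -> str:
    """Return the first intent with a keyword in the question, else 'None'."""
    lowered = question.lower()
    for intent, words in KEYWORDS.items():
        if any(word in lowered for word in words):
            return intent
    return "None"


def route(question: str, classify: Callable[[str], str] = detect_intent) -> str:
    """Pick the knowledge base for a question, falling back to a general one."""
    return KB_BY_INTENT.get(classify(question), "kb-general")


print(route("When is my next paycheck?"))           # kb-payroll
print(route("How do I submit an expense report?"))  # kb-finance
print(route("Where is the cafeteria?"))             # kb-general
```

Because `route` accepts any classifier function, the keyword stand-in could be swapped for a function that calls a real language understanding service without touching the routing table.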

Run

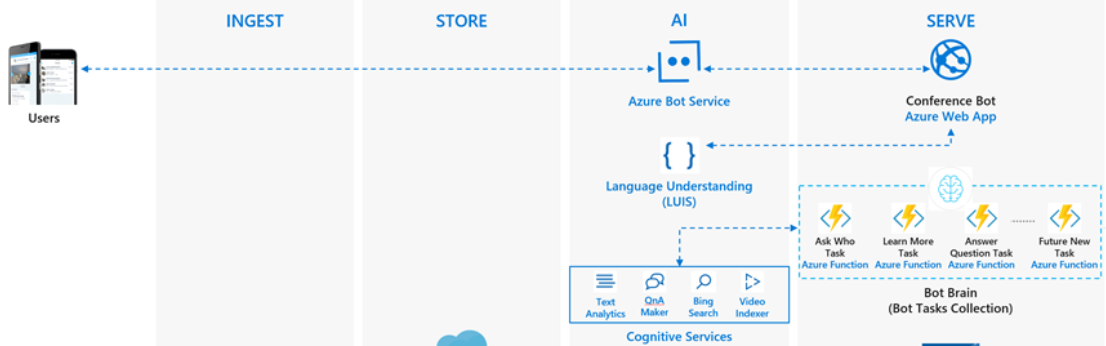

Our final example is the most comprehensive use of the Knowledge Cognitive Service paired with other Azure components, and was recently featured in a Microsoft Machine Learning blog post about building a Conference Buddy intelligent app.

The scenario: users at a conference presentation wish to learn more about the speaker and/or the speaker’s content, but don’t have the opportunity to directly ask “live” questions. For example, an attendee might want to access the speaker’s bio by asking a question in a simple interface, rather than following a series of links on web pages. Or attendees may ask questions about the presentation topic that weren’t covered during the talk and want focused results, like a video that just plays the portion relevant to their question. The speaker also wants to know what questions the audience is asking.

All of this can be handled by grouping the Knowledge service with LUIS and other services like Bing Search, Text Analytics, and Video Indexer, and then presenting the results to audience members in a chat (bot) interface. The speaker can have a dashboard to monitor all the questions in real time. The bot greets attendees like this:

Behind the scenes, there are multiple services configured to handle the different question types. You can review the full architecture on the blog, but it is analogous to our previous example of having LUIS route the question intent to the appropriate knowledge base within the Knowledge service. In this example, however, we use LUIS to route among multiple Cognitive Services and Azure functions.
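
As a rough sketch of that pattern, the Python below dispatches each question to a handler chosen by intent and keeps a running tally that a speaker’s dashboard could read. It is not the Conference Buddy implementation: the intent names, the stub handlers, and the keyword classifier are all hypothetical stand-ins for LUIS and the real services.

```python
from collections import Counter
from typing import Callable, Dict


# Stub handlers standing in for calls to real services (all hypothetical).
def answer_from_knowledge_base(question: str) -> str:
    return f"[QnA] looking up: {question}"


def search_the_web(question: str) -> str:
    return f"[Search] searching for: {question}"


def find_video_segment(question: str) -> str:
    return f"[Video] finding the clip for: {question}"


HANDLERS: Dict[str, Callable[[str], str]] = {
    "SpeakerInfo": answer_from_knowledge_base,
    "TopicQuestion": search_the_web,
    "ShowVideo": find_video_segment,
}


def fake_classifier(question: str) -> str:
    """Keyword stand-in for an intent model such as LUIS."""
    lowered = question.lower()
    if "video" in lowered:
        return "ShowVideo"
    if "speaker" in lowered:
        return "SpeakerInfo"
    return "TopicQuestion"


class ConferenceBot:
    """Routes questions to handlers and counts them by intent."""

    def __init__(self, classify: Callable[[str], str]) -> None:
        self._classify = classify
        self.question_counts: Counter = Counter()

    def handle(self, question: str) -> str:
        intent = self._classify(question)
        self.question_counts[intent] += 1  # data a dashboard could display
        handler = HANDLERS.get(intent)
        if handler is None:
            return "Sorry, I don't know how to help with that yet."
        return handler(question)


bot = ConferenceBot(fake_classifier)
print(bot.handle("Who is the speaker?"))
print(bot.handle("Show me the video about routing"))
print(dict(bot.question_counts))
```

Keeping the handlers in a plain dictionary means a new service can be added by registering one more intent, without changing the bot itself.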

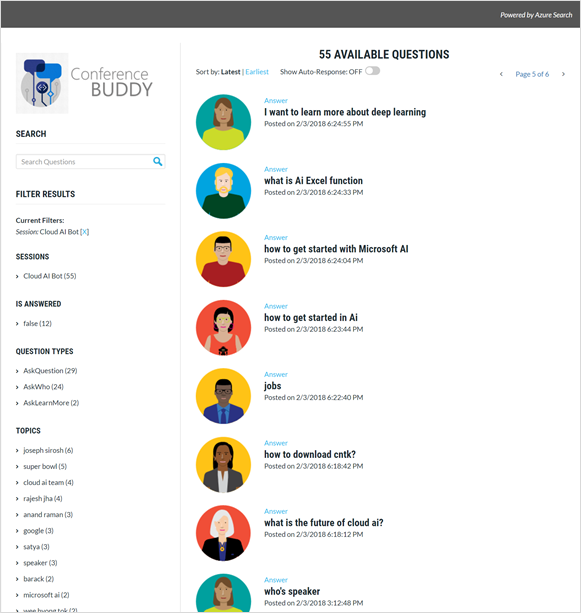

Finally, a speaker’s dashboard can be built using an Azure web app to provide insights from attendees’ questions. This could be shown on stage, provided to users, or just used by the moderator(s) to gauge interest and participation.

That’s it! I hope this helped familiarize you with the power of Cognitive Services in general, and with the variety of ways you can use the Knowledge service specifically.