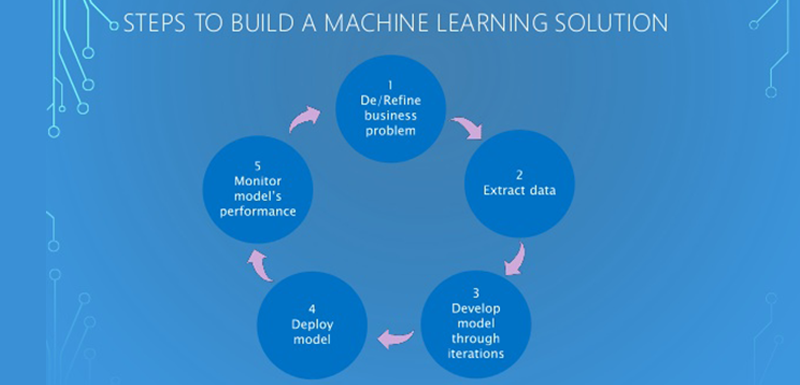

We live in a time when technology can do amazing things. Computers now perform tasks that historically required a trained human expert, and machines can do so faster, more cheaply, and with greater consistency. An ATM can read a check deposit without the need for a teller; cars can anticipate collisions and apply the brakes early; insurance companies are even settling claims using only chatbots and image processing.

You may have seen use cases like these and wondered how machine learning and advanced analytics could boost your own business. Perhaps you’ve even been ambitious enough to try a data science training course, only to find that the materials quickly move into higher-level mathematics and advanced programming exercises. Or maybe you’ve hired data scientists, but their lack of industry knowledge and reliance on technical jargon make it difficult to get meaningful results.

Microsoft’s Azure Machine Learning (“AzureML”) platform can provide a middle ground: a way to unlock the power of data science that still makes sense to the business. AzureML is the data science offering in Microsoft’s Azure suite of cloud-based products. It uses a graphical user interface to guide users through the machine learning process. Because it’s part of the Azure family, AzureML integrates with Azure’s cloud storage and data processing capabilities. It can also publish models as web services, so your existing applications can send data to AzureML and quickly get suggestions or predictions back. In this post, I’ll walk through a basic machine learning process and show how Azure Machine Learning helps you get those insights.

Step 1: Data Preparation

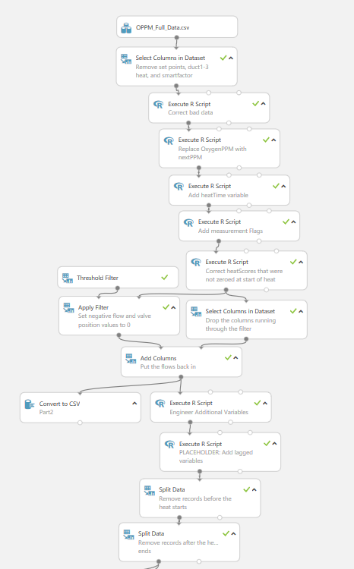

Preparing the data is usually by far the most time-consuming phase of a data science project, and AzureML has several utilities to help. You can filter data, join datasets together, perform arithmetic across the fields in your dataset, and split the data into multiple datasets. AzureML can even identify outliers automatically so that you can remove them. If the native AzureML modules don’t do exactly what you need, AzureML supports R, Python, or SQL modules for more advanced operations. Each data preparation step is represented in the user interface as a rectangular module, and you can add comments to these modules so you know exactly what each one does, which makes your data pipeline easier to navigate.

An AzureML pipeline can consist of several steps to filter, join, and aggregate data to prepare for model development.
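To make these modules concrete, here is a minimal plain-Python sketch of what the filter, join, arithmetic, outlier-removal, and split steps do to a table. It is not AzureML code and uses no AzureML API; the tables, column names, and values are made up for illustration, and the outlier rule (a modified z-score based on the median absolute deviation, with a cutoff of 3.5) is my own choice, not necessarily the method AzureML applies.

```python
import random
import statistics

# Hypothetical sample tables, made up for illustration.
customers = [
    {"customer_id": 1, "region": "East"},
    {"customer_id": 2, "region": "West"},
    {"customer_id": 3, "region": "East"},
]
orders = [
    {"customer_id": 1, "quantity": 2, "unit_price": 10.0},
    {"customer_id": 1, "quantity": 1, "unit_price": 12.5},
    {"customer_id": 2, "quantity": 3, "unit_price": 8.0},
    {"customer_id": 2, "quantity": 1, "unit_price": None},  # missing price
    {"customer_id": 3, "quantity": 400, "unit_price": 9.0},  # suspiciously large order
    {"customer_id": 4, "quantity": 1, "unit_price": 5.0},  # no matching customer
]


def filter_rows(rows, predicate):
    """Keep only the rows for which predicate(row) is True."""
    return [r for r in rows if predicate(r)]


def inner_join(left, right, key):
    """Combine rows that share `key`; rows without a match are dropped.
    Assumes `key` is unique in `left`."""
    lookup = {row[key]: row for row in left}
    return [{**lookup[r[key]], **r} for r in right if r[key] in lookup]


def add_column(rows, name, func):
    """Derive a new column by applying arithmetic to existing fields."""
    return [{**r, name: func(r)} for r in rows]


def remove_outliers(rows, field, cutoff=3.5):
    """Drop rows whose modified z-score (median absolute deviation based) exceeds cutoff."""
    values = [r[field] for r in rows]
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return list(rows)  # no spread to measure against, so keep everything
    return [r for r in rows if 0.6745 * abs(r[field] - median) / mad <= cutoff]


def split_rows(rows, fraction=0.75, seed=42):
    """Shuffle reproducibly, then split into two datasets (e.g. training and test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * fraction)
    return shuffled[:cut], shuffled[cut:]


# The pipeline: one function call per "module".
priced = filter_rows(orders, lambda r: r["unit_price"] is not None)
joined = inner_join(customers, priced, "customer_id")
with_totals = add_column(joined, "total", lambda r: r["quantity"] * r["unit_price"])
cleaned = remove_outliers(with_totals, "total")
train, test = split_rows(cleaned)
```

Each function corresponds roughly to one rectangular module, so the pipeline reads as a sequence of small, named steps, which is the same property that commenting modules in AzureML gives you.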

Step 2: Model Design

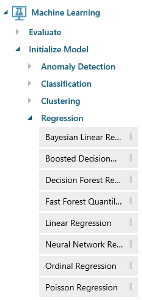

The type of model you should use depends on what you are trying to predict. AzureML has built-in support for several common machine learning algorithms, so you can design a model that fits your needs. The available methods range from very simple (e.g., linear regression) to highly complex and customizable (e.g., convolutional neural networks). Each model type exposes a few parameters for you to set, and if you’re ever unsure how a method works or what its parameters mean, you can click the Help button for a description. For more advanced users, AzureML can also cross-validate a model across different parameter settings to fine-tune its performance.

AzureML has several popular learning algorithms pre-loaded for use.
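The idea behind cross-validating over parameter settings can be sketched in plain Python. The example below fits a one-variable linear regression with a hypothetical penalty parameter `lam` (a ridge-style L2 penalty on the slope), scores each candidate value with 5-fold cross-validation, and keeps the value with the lowest average held-out error. It illustrates the technique under these assumptions and is not AzureML’s implementation; in AzureML the sweep is configured through its own modules rather than written by hand.

```python
import random


def fit_penalized_line(xs, ys, lam):
    """Fit y = a + b*x by least squares with a penalty lam * b**2 on the slope.
    lam = 0 gives ordinary simple linear regression."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    b = sxy / (sxx + lam)
    a = y_mean - b * x_mean
    return a, b


def mean_squared_error(a, b, xs, ys):
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def k_folds(n, k, seed=0):
    """Randomly assign row indices 0..n-1 to k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validated_error(xs, ys, lam, k=5):
    """Average held-out error: train on k-1 folds, test on the remaining one, k times."""
    errors = []
    for fold in k_folds(len(xs), k):
        held_out = set(fold)
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        a, b = fit_penalized_line(train_x, train_y, lam)
        errors.append(mean_squared_error(a, b, [xs[i] for i in fold], [ys[i] for i in fold]))
    return sum(errors) / len(errors)


def sweep(xs, ys, candidates, k=5):
    """Score every candidate setting and return the best one plus all scores."""
    scores = {lam: cross_validated_error(xs, ys, lam, k) for lam in candidates}
    best = min(scores, key=scores.get)
    return best, scores


# Synthetic data: a true line y = 3 + 2x plus Gaussian noise.
rng = random.Random(1)
xs = [float(i) for i in range(20)]
ys = [3.0 + 2.0 * x + rng.gauss(0.0, 1.0) for x in xs]
best_lam, scores = sweep(xs, ys, [0.0, 1.0, 10.0, 1e6])
```

On this synthetic data, a very large `lam` shrinks the slope almost to zero, so it scores far worse than the small values; the sweep picks a setting by held-out error rather than by how well the model fits the data it was trained on.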

Step 3: Model Deployment

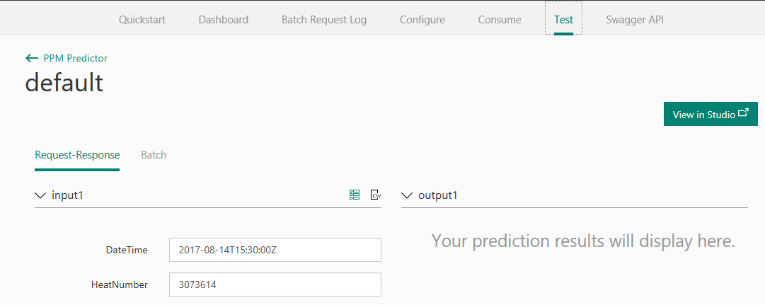

Once your model has been designed and trained, it can be published as a standard web service so your other applications can access its predictions to augment your reporting and decision-making capabilities. Applications can send data for predictions in real time, and AzureML returns a prediction for each new record it receives. Because AzureML is part of the Azure family of products, you have additional options for sending and processing data that is already stored in the Azure cloud.

When the model is ready to be used, AzureML has a portal that allows you to configure and test your model. It also provides sample code to guide your developers.
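For the developers on the receiving end, calling a published model typically comes down to an authenticated HTTPS POST with a JSON body. The sketch below uses only Python’s standard library. The endpoint URL, API key, input name `input1`, and column names are hypothetical placeholders, and the payload layout (`Inputs`, `ColumnNames`, `Values`, `GlobalParameters`) is modeled on the request-response format used by classic AzureML web services; treat it as an assumption and copy the exact shape from the sample code your portal generates.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the values shown for your web service.
ENDPOINT_URL = "https://example.invalid/score"
API_KEY = "YOUR-API-KEY"


def build_scoring_request(url, api_key, column_names, rows):
    """Build (without sending) a JSON POST request asking the service to score `rows`."""
    # Payload layout assumed from classic AzureML request-response samples;
    # verify it against the sample code your portal generates.
    payload = {
        "Inputs": {
            "input1": {
                "ColumnNames": column_names,
                "Values": rows,
            }
        },
        "GlobalParameters": {},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


def score(request, timeout=30):
    """Send the request and return the service's JSON response as a Python object."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# One hypothetical record to score (built here, not sent).
request = build_scoring_request(
    ENDPOINT_URL,
    API_KEY,
    ["region", "quantity", "unit_price"],
    [["East", "2", "10.0"]],
)
```

Building the request separately from sending it keeps the payload easy to inspect and test; once real values are filled in, `score(request)` sends it and returns the parsed response.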

This post has given a very high-level overview of the machine learning process, but building a robust and valuable data science practice involves several other considerations. How will predictive use cases be prioritized and delivered? How will models be governed? How often will your models be reviewed and maintained? It can be tough to know where to start, but 3Cloud can help.