Intro to Data Factory v2

Azure Data Factory (ADF) version 2 (v2) is a game changer for enterprise integration and workflow orchestration. For those of you who aren’t familiar with Data Factory:

“It is a cloud-based data integration service that allows you to create data-driven workflows in the cloud for orchestrating and automating data movement and data transformation. Using Azure Data Factory, you can create and schedule data-driven workflows (called pipelines) that can ingest data from disparate data stores. It can process and transform the data by using compute services such as Azure HDInsight Hadoop, Spark, Azure Data Lake Analytics, and Azure Machine Learning.

Additionally, you can publish output data to data stores such as Azure SQL Data Warehouse for business intelligence (BI) applications to consume. Ultimately, through Azure Data Factory, raw data can be organized into meaningful data stores and data lakes for better business decisions.” (found here)

Data Factory v1 vs v2

ADF version 1 (v1) was designed predominantly for the “modern data platform”, i.e., data lake architecture and big data tooling in Azure. Microsoft accepted the feedback from its customers and partners to develop v2 and redefine “modern” to include existing on-premises infrastructure and batch orchestration needs. If you haven’t taken the time to explore what’s coming in v2, please take this as your wake-up call. Here are some of the exciting capabilities that v2 offers:

- Linked services now execute under an integration runtime that can be hosted on premises or in the cloud.

- A cloud-hosted SSIS integration runtime allows for lift-and-shift and scale out of on-premises SSIS solutions.

- Schedule triggers enable pipeline execution based on a “wall clock”. The output of the pipeline is no longer expected to be contiguous and non-overlapping.

- Control flow activities enable conditional workflow logic (looping and branching) like SSIS.

- Expressions can be built from trigger-provided parameters. Again, very similar to SSIS.

- Custom Activities can run against any executable program on Windows or Linux.

Version 2 custom activity: run any executable

The ability to define custom activities against any executable program in v2 means we can be platform agnostic when orchestrating data in the cloud. If a process can run on a single machine in Windows or Linux, ADF v2 can integrate it into a pipeline via the cloud-hosted integration runtime and the Azure Batch service. (If you need custom distributed jobs, those are available through the HDInsight, Data Lake Analytics, and now generally available Azure Databricks linked services.) In ADF v1, custom activities are limited to .NET solutions that specifically target .NET Framework 4.5.2 and implement the Execute method of the IDotNetActivity interface. This means a lot of refactoring if you are modeling a v1 activity on an existing solution, plus additional program(s) to debug it locally.
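To make this concrete, a v2 custom activity is declared in the pipeline definition as an activity of type `Custom` that names a command line and points at an Azure Batch linked service. The sketch below builds such a definition as a Python dictionary and prints it as JSON. It is an illustration only: the activity name, both linked service names, the folder path, the executable name, and the `targetLanguage` property are hypothetical placeholders, not values from the sample solution.

```python
import json


def build_custom_activity(name, command, batch_linked_service,
                          storage_linked_service, folder_path,
                          extended_properties=None):
    """Return a dict shaped like an ADF v2 Custom activity definition."""
    return {
        "name": name,
        "type": "Custom",
        # Azure Batch linked service that supplies the compute pool.
        "linkedServiceName": {
            "referenceName": batch_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            # Command line executed on the Batch node.
            "command": command,
            # Storage linked service and folder holding the executable.
            "resourceLinkedService": {
                "referenceName": storage_linked_service,
                "type": "LinkedServiceReference",
            },
            "folderPath": folder_path,
            # Free-form values passed through to the executable.
            "extendedProperties": dict(extended_properties or {}),
        },
    }


# All names below are hypothetical placeholders.
activity = build_custom_activity(
    name="TranslateDocuments",
    command="DocumentTranslator.exe",
    batch_linked_service="AzureBatchLinkedService",
    storage_linked_service="AzureStorageLinkedService",
    folder_path="customactivity/translator",
    extended_properties={"targetLanguage": "en"},
)
print(json.dumps(activity, indent=2))
```

Nothing in the definition constrains the command itself: on a Linux pool it could just as well invoke a Python or Java entry point.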

Integrating with Microsoft AI platform Cognitive Services

So, now that we’ve been set free to use any executable when looking for solved problems, let’s consider the possibilities for integrating modern solutions into enterprise workflow orchestration. For inspiration, I looked to Microsoft AI Cognitive Services: extremely powerful AI solutions that Microsoft maintains and packages as APIs for developers to integrate into their own applications. Here I came across the Translator Text API as well as a related GitHub project. With some simple refactoring of the base classes, I was able to transform this Windows application built on .NET 4.6.1 into a console executable that ADF v2 can call at runtime and that reads and writes directly against Azure blob storage*. I now have a batch process that can take Microsoft Office, plain text, HTML, PDF and SRT caption files and translate them to and from over 60 different languages (including Klingon). Potentially more useful than Klingon, the Translator Text API can be extended further via the Microsoft Translator Hub, so that translations specific to your business domain are included and the communication style used in your enterprise is learned as well.

*Technical note: Microsoft Document Translator can be called from the command line and run against local storage, meaning I could have simply installed it on a batch pool node and used dedicated attached storage instead, with no refactoring required at all. I just preferred to use blob storage for this demo.

Sample Solution

The basic plumbing for an ADF v2 custom activity is documented here. The sample I am using is in C#, but anything that executes on Windows or Linux is fair game: Python, Java, Scala, etc. The key step for batch execution is to read the activity.json, linkedServices.json, and (optional) datasets.json files that ADF v2 adds to the runtime directory when calling the executable. These files are how all the system parameters and extended properties of your activity are passed to your code.

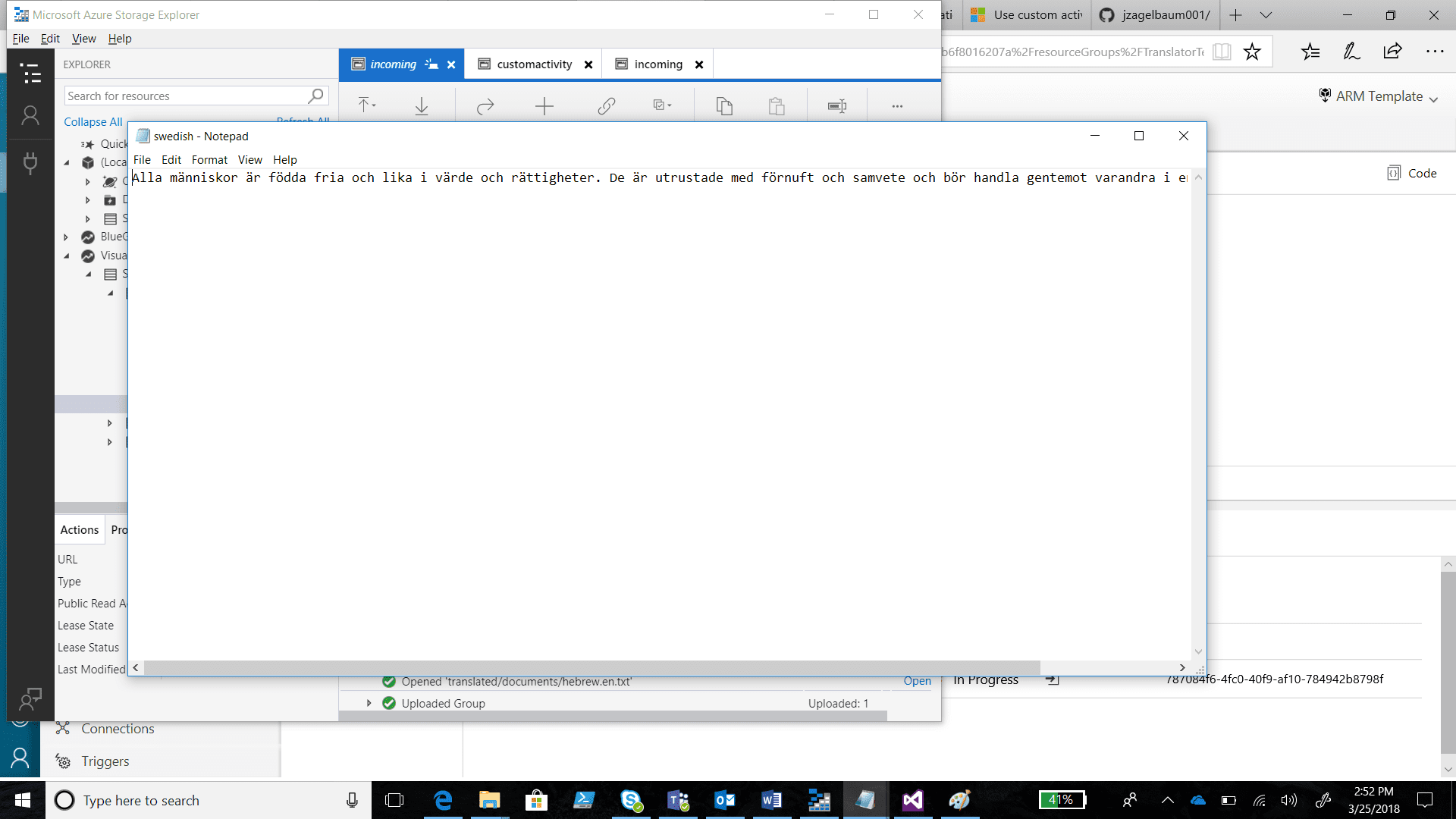
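Since any language is fair game, here is a minimal Python sketch of the same plumbing, assuming the three JSON files sit in the process's working directory and that extended properties live under `typeProperties.extendedProperties` in activity.json. The helper names `load_activity_context` and `extended_property` are hypothetical, and this is not the code from the C# sample.

```python
import json
from pathlib import Path


def load_activity_context(working_dir="."):
    """Load the JSON files ADF v2 places next to the executable.

    datasets.json is treated as optional and yields None when absent.
    """
    root = Path(working_dir)

    def read(name, required=True):
        path = root / name
        if not path.exists():
            if required:
                raise FileNotFoundError(f"expected {name} in {root.resolve()}")
            return None
        # utf-8-sig tolerates a byte-order mark if one is present.
        with path.open(encoding="utf-8-sig") as handle:
            return json.load(handle)

    return {
        "activity": read("activity.json"),
        "linked_services": read("linkedServices.json"),
        "datasets": read("datasets.json", required=False),
    }


def extended_property(activity, key, default=None):
    """Look up one value under typeProperties.extendedProperties."""
    props = activity.get("typeProperties", {}).get("extendedProperties", {})
    return props.get(key, default)
```

An executable would typically call `load_activity_context()` once at startup and then pull out what it needs, for example `extended_property(context["activity"], "targetLanguage", "en")`, where `targetLanguage` is a made-up property name.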

The sample solution is available here. When successfully deployed and executed, the custom activity iterates over all documents in a blob container, automatically identifies the source language, and translates the documents to English, writing them to a translated folder in the same blob container. Here are some sample screenshots:

The custom ADF v2 activity ran successfully in the visual editor.
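The control flow of that activity can be sketched in a few lines of Python. This is a simplified model, not the sample solution's code: the four callables are hypothetical stand-ins for a blob storage client and a Translator call (which is assumed to detect the source language itself), the `translated/` prefix is an assumed name for the translated folder, and skipping blobs already under that prefix is my own choice to keep reruns from translating earlier output.

```python
from typing import Callable, Iterable, List

# Assumed name for the output folder inside the container.
TRANSLATED_PREFIX = "translated/"


def translate_container(
    list_blobs: Callable[[], Iterable[str]],
    read_blob: Callable[[str], bytes],
    translate: Callable[[bytes, str], bytes],
    write_blob: Callable[[str, bytes], None],
    target_language: str = "en",
) -> List[str]:
    """Translate every source blob and write it under TRANSLATED_PREFIX.

    Returns the names of the blobs that were written.
    """
    written = []
    # Snapshot the listing first so new output is not picked up mid-loop.
    for name in list(list_blobs()):
        if name.startswith(TRANSLATED_PREFIX):
            continue  # skip output from a previous run
        output_name = TRANSLATED_PREFIX + name
        write_blob(output_name, translate(read_blob(name), target_language))
        written.append(output_name)
    return written
```

Passing the storage and translation operations in as functions keeps the loop easy to exercise locally with an in-memory dictionary before it is pointed at a real container.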

Potential Use Cases

This pattern could just as easily be extended to include any of the other language APIs available in Cognitive Services. Likewise, we can apply this pattern to other blobs, such as images and sound recordings, using the vision or speech APIs, respectively. Some potential solutions we can now build as a batch process:

- Identify who is speaking and tag the file accordingly

- Tag images and add descriptive text

- Extract sentiment and key phrases from text

- Identify specific people and/or emotions in images

Many of these tasks are done manually by third parties in an enterprise setting, or simply not done at all. The cost savings and quality improvement from switching to a Cognitive Services API can be significant, and the switch is a natural first step for bringing AI to your organization.

If you’re interested in seeing how Azure and Microsoft AI can enable insights and streamline operations in your organization, please contact us. We’re happy to help.