With Power BI Premium and the Capacity Metrics App, you can track metadata such as refresh statistics of your reports and data sources, as well as additional detail regarding CPU usage, memory usage, and average wait times for a dataset to refresh. Refresh data can be invaluable for users monitoring the health of their workspace and troubleshooting issues as well as IT leaders ensuring proper SLA standards from ETL to the Power BI Report. It also provides transparency and trust to business users to know that the data they are viewing is up to date.

However, not all organizations can leverage Power BI Premium for this feature. So how can we replicate this framework to provide users similar information on their report workspaces and datasets as well as automate this process regularly to provide the most up to date information? This guide will provide you the steps you need to show what is possible! We will need the following tools and prerequisites before proceeding.

- Azure Active Directory

- Power BI App Registration with a Client ID/Secret

- Security Group to Add App Registration Created

- PowerAutomate

- To automate data collection from the REST APIs and storage to Azure SQL

- Azure SQL

- Storage and update refresh data

- Housing of metadata tables to iterate through refreshes

- Key Objects

- Table to Store Metadata (Power BI Objects)

- Table to Update Refresh History (Power BI Refresh History)

- Stored Procedure to collect refresh data and merge refresh history with the fact table

- Power BI Service

- Service Principal Authentication Enabled with the security group (Admin Settings)

- O365 Service Account is at least a member/admin to all necessary workspaces for Power Automate

- Service Principal is in the workspaces where artifacts need monitoring

- Azure Key Vault

- Securely store client ID and secrets

- O365 Service Account

- Dedicated Service Account to perform automation of all tasks in Power Automate.

- Other

- Knowledge of API testing and sending GET/POST requests

Power BI REST APIs Have Entered the Chat

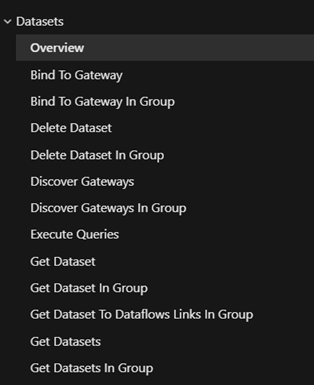

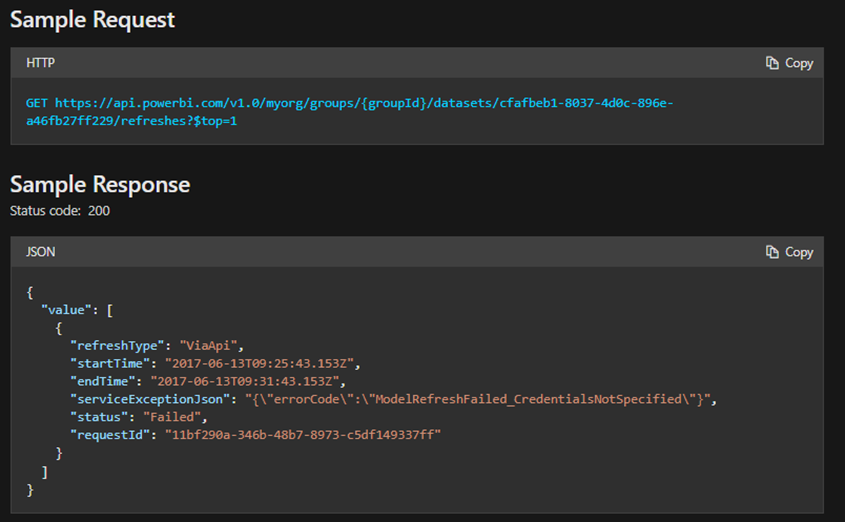

Power BI has provided a series of service endpoints where users can programmatically perform tasks ranging from embedding resources to tenant administration and managing Power BI content. To access these endpoints, you will need to create both an App Registration for Power BI and a Security Group in Azure Active Directory to add the application. The following are some of the functionalities provided in the Datasets Section of the Rest API. For example, hitting the following URI link and getting the top 1 refresh criteria will return the most recent refresh in JSON format.

Store Metadata and Power BI Refresh Data in Azure SQL DB

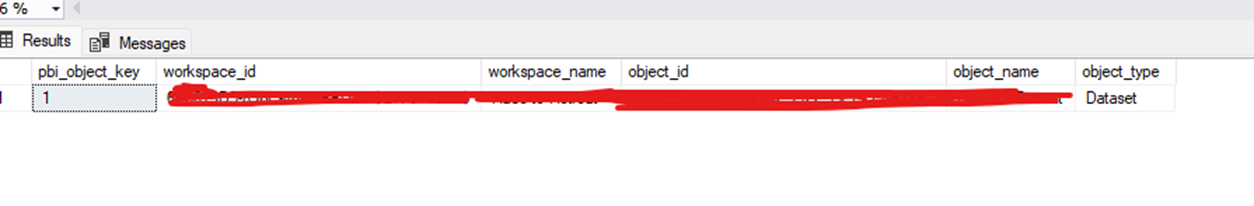

Since there are multiple Power BI datasets that require monitoring, it’s helpful to use a metadata-driven approach for collecting data. This avoids having to hard-code the solution for each dataset, and enforces consistency. The following database objects are required:

- PBI_Object table – stores metadata about datasets and dataflows that is required for API calls

- PBI_Refresh_History table – stores refresh history retrieved from the API

- PBI_Refresh_History view – provides data to Power BI for reporting. Some data processing is peformed here, such as time zone conversion, addition of a refresh duration column, etc.

- Load_PBI_Refresh_History stored procedure – receives JSON data from the API, converts it to rows and columns, and merges it into the PBI_Refresh_History table.

The Load_PBI_Refresh_History stored procedure is the star of the show here. Performing the JSON parsing in a stored procedure means that Power Automate doesn’t have to, and simplifies that part of the solution. All the application has to do is execute the stored procedure with raw JSON as a parameter. This also makes the solution more portable since executing a stored procedure is a standard process that can be done by numerous applications.

Using MERGE in the stored procedure is also important because frequent API calls can return overlapping data. MERGE ensures that our process is idempotent and that duplicates are not being loaded. You can find the queries to generate this table on our public GitHub repository!

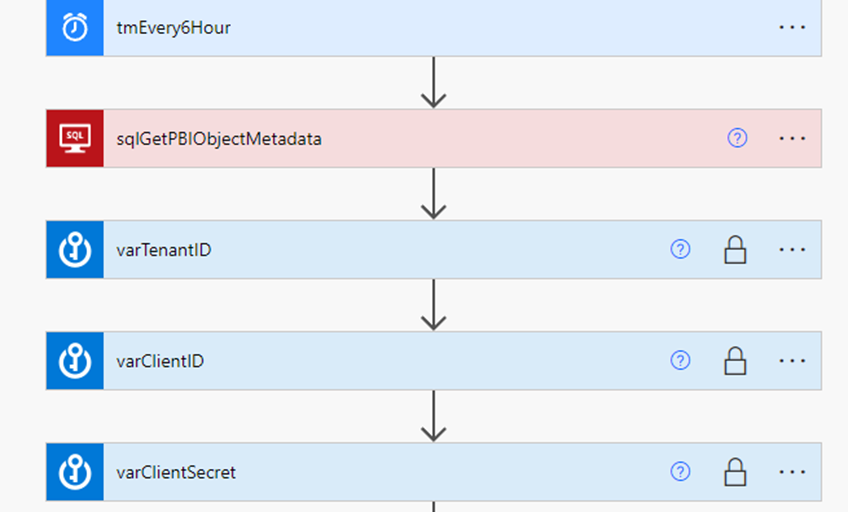

Power Automate Flow Part 1: Setting Parameters and Variables

In our flow, we’ve done the following:

- Created a recurring 6 hour trigger

- A SQL Step to get the rows from the Power BI Object table

- This will later be used to iterate in a for loop to pull refresh history data

- Get Key Vault App IDs and secrets from Azure Key Vault

- We’ve secured the input and output to ensure results in plaintext are not shown

For the demonstration we will have one dataset, but this table can have multiple artifacts to loop over can call the refresh history API.

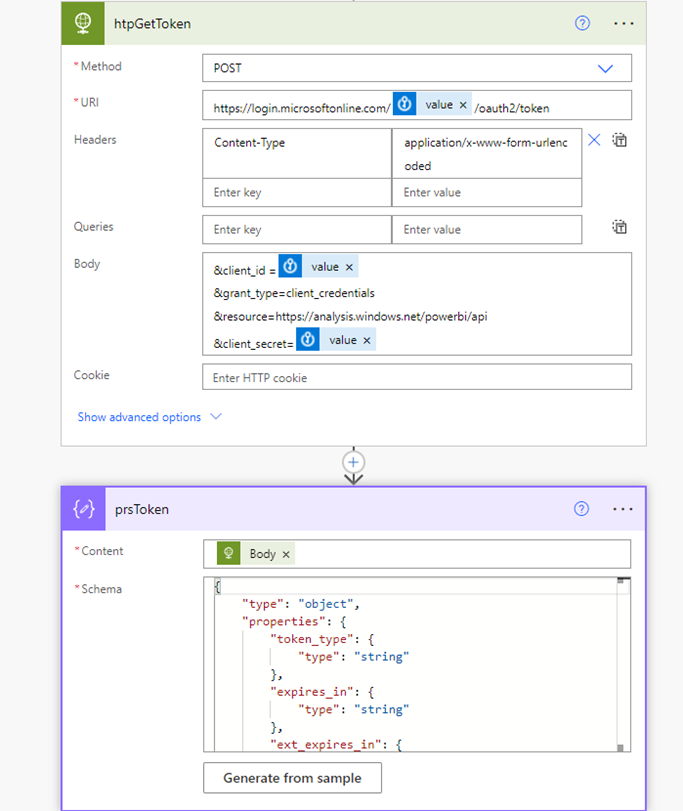

Power Automate Flow Part 2: Getting a Power BI Access Token from REST API

Leveraging the HTTP Connector and our Key Vault parameter, we then send a request to get an access token to perform additional requests to the Power BI REST API.

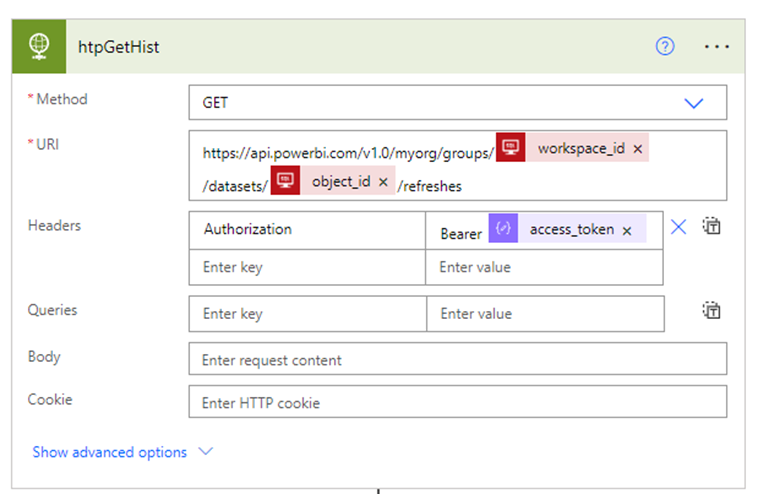

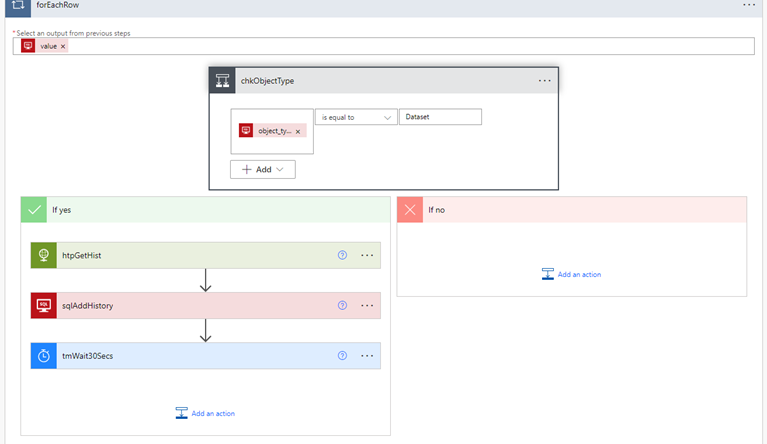

Power Automate Flow Part 3: Looping Through Row GUIDs and Loading to our History Table

Now that we have a token, we can start looping through each row we collected from our Object Table in our database and pass a GET request for the refresh history for each dataset (shown below). We can also add a control flow statements to branch out to different artifacts in our Power BI tenant like dataflows, but for this example we’ve left the no side of the flow alone.

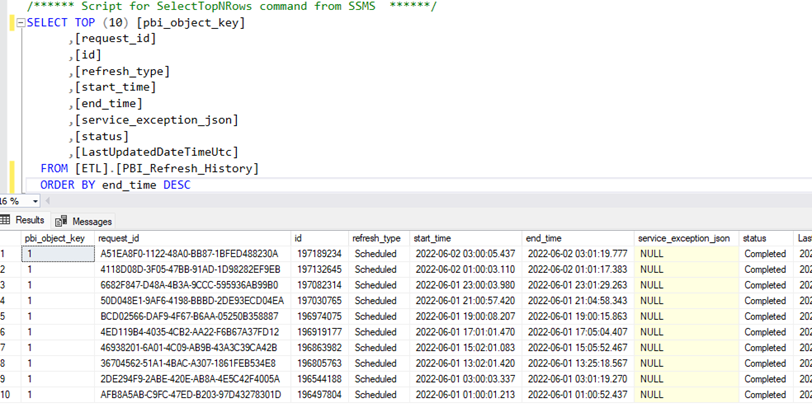

Once we get a response, we then take that and call the stored procedure to upload the history JSON generated from the GET Request. We then wait 30 seconds to avoid sending multiple requests and potential API throttling. If we go to our history table, we can then see that the refresh history has successfully been loaded into the database!

Conclusion and Extensions

Using Power Automate, Azure SQL, and the Power BI REST API, we created a framework to house a wide variety of metadata and information provided. In this example, we used the Get Refresh History to pull refresh data programmatically for a Power BI dataset and store it in our Azure SQL tables. From here, users can then build reports on top of this data to audit and troubleshoot Power BI refresh issues that occur in their tenant.

As well as that, this framework also lends itself to other applications of the Power BI REST API + Power Automate such as:

- Pulling history information from other artifacts (dataflows, etc.)

- Calling other APIs and storing their results in Azure SQL

- Adding Orchestration of Refreshes through Power Automate and the REST API and integration with a broader ETL process

- e. can we send updates to users on refresh status of Power BI and timings?

Connect with Us!

Please reach out to us today to find out more about how BlueGranite’s talented team can help you bring clarity and efficiency to your Modern Analytics endeavors today!

BlueGranite offers a variety of resources and free training events to help you learn how you can leverage Modern Data Analytics.

Please visit our website and YouTube Channel to learn more, or contact us directly to see how we can help you explore your about modern data analytics options and accelerate your business value.

About the Authors

Nithin is a Solution Consultant at 3Cloud with experience working with stakeholders in the healthcare, hospitality, and tourism industries to design and develop robust data-driven solutions across different service lines and business units. Nithin holds a B.S. in Industrial & Systems Engineering from the University of Florida and is currently pursuing a M.S. in Analytics from the Georgia Institute of Technology. Check him out on LinkedIn!

Chris Koester is a Technical Architect at 3Cloud. He has been working with data since 2008, with a wide range of experience in analytics. His current focus is the design and development of modern data platform solutions in Azure. Chris holds a Bachelor of Science degree in Informatics from Indiana University. Check him out on LinkedIn!